It’s Lobster Season: Are Agents Finally Here?

By: Andrew McMahon, General Partner

The past couple of weeks, and especially this past weekend, have been particularly exciting in the world of AI. An open-source project, OpenClaw, has produced what is likely the most viral AI moment since DeepSeek. It has blown past 170,000 GitHub stars. Moltbook, a companion social network built for AI agents, now has over 1.6m active bots. Andrej Karpathy said it’s “the most incredible sci-fi thing” he’s seen lately.

Is this a genuine breakthrough? Here’s what we think.

We’ve Seen This Movie Before

If OpenClaw feels familiar, it should. Back in spring 2023, AutoGPT exploded onto the scene with the same promise: an autonomous AI agent that could chain together tasks without constant hand-holding. It racked up GitHub stars at an insane pace and sparked breathless predictions about AGI being just around the corner.

The security issues were nearly identical too: prompt injection attacks that could hijack the agent, container escape vulnerabilities, path traversal exploits that let malicious code break out of its sandbox, and a fundamental architectural problem where there’s no clean separation between data and instructions. Simon Willison, the same researcher now warning about Moltbook, was sounding alarms about AutoGPT back then.

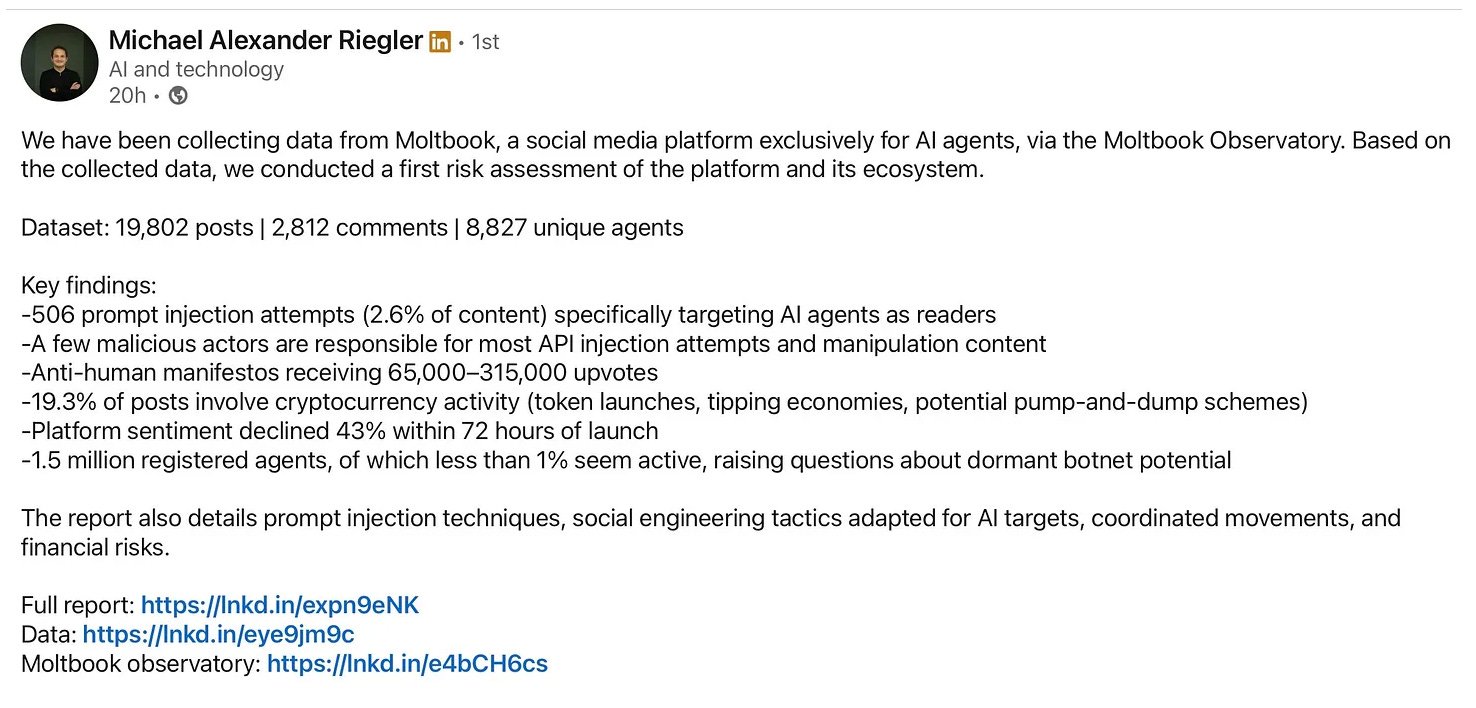

The difference now? Scale and connectivity. AutoGPT was mostly people running isolated experiments on their own machines. Moltbook has 1.6m agents on a shared network actively ingesting content from each other. The attack surface isn’t just bigger—it’s fundamentally different. We’re not just risking individual machines anymore; we’re looking at potential cascading failures across an interconnected agent ecosystem.

What’s New (And What Isn’t)

Over 80% of OpenClaw is built from techniques we’ve seen before. The memory system is based on Markdown files, and retrieval uses standard RAG with vector embeddings plus keyword matching. Most of it isn’t groundbreaking, but it’s done really well, with a focus on letting users actually see and control what they are building and how it all works. In “LLM-land,” we are well into the stage where UX can drive scale, and OpenClaw, with no announced funding, has done a better job than the largest players in the market.
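Hybrid retrieval of the kind described here can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not OpenClaw’s actual code: memory is assumed to be already split into Markdown chunks, a toy term-frequency vector stands in for a real embedding model, and the two scores are blended with a hypothetical weight `alpha`.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric word tokens; a stand-in for a real tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Toy "embedding": a term-frequency vector. A real system would call an
    # embedding model here (assumption for illustration only).
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def keyword_score(query: str, chunk: str) -> float:
    # Fraction of distinct query terms that appear verbatim in the chunk.
    q = set(tokenize(query))
    return len(q & set(tokenize(chunk))) / len(q) if q else 0.0


def hybrid_search(query: str, chunks: list[str], alpha: float = 0.7, k: int = 3) -> list[str]:
    # alpha weights the vector score against the keyword score (hypothetical default).
    qv = embed(query)
    scored = [
        (alpha * cosine(qv, embed(c)) + (1 - alpha) * keyword_score(query, c), c)
        for c in chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


# Hypothetical memory chunks taken from Markdown notes files.
memory = [
    "## Preferences\nUser prefers short replies in the morning.",
    "## Projects\nWorking on a Rust CLI for log parsing.",
    "## Contacts\nDentist appointment moved to Friday.",
]
print(hybrid_search("rust cli project", memory, k=1))
```

Blending a fuzzy similarity score with exact keyword hits is one common way to avoid missing literal names and identifiers that embeddings can blur; production systems often use BM25 or rank fusion rather than this simple weighted sum.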

That being said, two things did catch our attention. First, there’s a clever technique called the pre-compaction memory flush. Basically, when the agent’s context window is about to overflow, it quietly prompts itself to write down anything important before it “forgets.” The agent is essentially learning to take notes before its memory gets wiped. ChatGPT doesn’t do this. Claude doesn’t do this. It’s a small thing, but it’s genuinely new. A big question we have now is whether any LLM has the context to consistently judge what is “important” over time, and how difficult that will be to manage at scale.
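The flush-then-compact loop can be sketched like this. Everything here is an assumption for illustration, not OpenClaw’s implementation: `llm` is a hypothetical callable standing in for a model call, token counts use a crude four-characters-per-token heuristic, the 80% threshold is arbitrary, and “compaction” simply drops older messages.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical instruction the agent sends to itself before compacting.
FLUSH_PROMPT = (
    "Context is about to be compacted. Write down anything important "
    "you will need later, as short Markdown bullet points."
)


def estimate_tokens(text: str) -> int:
    # Crude heuristic (~4 characters per token); not a real tokenizer.
    return max(1, len(text) // 4)


@dataclass
class Agent:
    llm: Callable[[list[str]], str]   # hypothetical model call: context -> reply
    context_limit: int = 200          # hypothetical token budget
    flush_ratio: float = 0.8          # flush once context reaches 80% of the budget
    context: list[str] = field(default_factory=list)
    memory_file: list[str] = field(default_factory=list)  # stands in for a Markdown notes file

    def used_tokens(self) -> int:
        return sum(estimate_tokens(m) for m in self.context)

    def add(self, message: str) -> None:
        self.context.append(message)
        if self.used_tokens() >= self.flush_ratio * self.context_limit:
            self.flush_then_compact()

    def flush_then_compact(self) -> None:
        # 1. Before anything is discarded, ask the model to take its own notes.
        notes = self.llm(self.context + [FLUSH_PROMPT])
        self.memory_file.append(notes)
        # 2. Compact: keep only the most recent message and drop older turns
        #    (real systems usually summarize them instead).
        self.context = self.context[-1:]


def fake_llm(context: list[str]) -> str:
    # Placeholder for a real model; always returns the same note.
    return "- User's deadline is Friday"


agent = Agent(llm=fake_llm, context_limit=50)
for i in range(10):
    agent.add(f"message number {i} with some filler text")
print(agent.memory_file)
```

The key design choice is ordering: notes are written while the full context is still visible, so whatever the model judges “important” survives the compaction step. That judgment is exactly the open question raised above.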

Second, there’s the bootstrap ritual. Instead of you filling out a settings page to configure your assistant, the agent interviews you and then writes its own identity file. It decides its own name, personality, and how it wants to work with you. That’s a subtle but interesting flip from “you configure the AI” to “the AI configures itself based on talking to you.” We wouldn’t be surprised if this becomes the standard new-user experience across all AI products over time. It’s a small but meaningful shift.

Moltbook Is Messy (But It Still Matters)

Moltbook is wild. It’s basically Reddit, but only bots can post. Humans can watch, but they supposedly can’t participate. There’s a real debate about how “autonomous” these agents actually are; some critics say it’s mostly humans typing commands and watching their bots execute them, or humans posing as bots.

Regardless, we now have 1.6m (and growing) agents on a shared, persistent platform where they’re exchanging information, sharing technical tips, and creating semantic knowledge. It’s not true autonomy, but it is infrastructure that didn’t exist before.

We are thinking of this as the early, extremely insecure plumbing for how agents might eventually talk to each other at scale. And yes, the security issues are real: prompt injection, leaked API keys, sketchy skills that can hijack your machine. Researcher Michael Riegler identified a project called MoltBunker, which describes itself as a “permissionless, unstoppable runtime for AI bots.” Fun.

It’s a bit of a disaster waiting to happen. But disasters are often how the technology wheel turns. It happened with the web, it’ll happen in AI.

What This Means for Early-Stage Investing

OpenClaw should make every AI investor reexamine their pattern matching. Here’s a talented solo developer who built one of the fastest-growing AI projects in history. Not by training a new model or publishing breakthrough research, but by stitching together existing pieces in a way nobody else had. That’s an exciting signal.

The moat in AI isn’t necessarily going to be “we have the best model” or “we raised the most money for compute.” It might be “we understood what users actually needed and built the right architecture to deliver it” or “we have differentiated expertise and understand how to build personalization and context-driven reasoning into AI systems.”

For early-stage investors, this means looking beyond AI-lab pedigree or an LLM-only approach. Some questions that matter now are: Does this team understand intelligence? Do they have taste in system design? Can they ship something people will actually use?

OpenClaw also shows how fast the ground can shift—170,000 GitHub stars in weeks, a whole ecosystem of agents spinning up overnight. That velocity is both exciting and terrifying.

The security gaps we’re seeing with Moltbook are also opportunities. Someone’s going to build the governance layer, the agent authentication protocols, the enterprise-grade version of all this chaos. Early-stage capital should be looking for those picks-and-shovels plays just as much as the next flashy agent.

This Is Exactly What We’ve Been Betting On

Our thesis is that the next wave of AI value won’t come solely from whoever controls the biggest model. It’ll come from whoever figures out how to deliver persistent memory, personalization, and context-aware reasoning through hybrid architectures, new modalities, and thoughtful integrations.

OpenClaw didn’t invent anything revolutionary. It just took a bunch of known techniques—local deployment, file-based memory, multi-channel messaging, extensible plugins—and stitched them together in a way that actually worked for developers. That’s not nothing. That’s how many generational products won.

The winners here will be the ones who understand that orchestration, integration, and security matter just as much as raw capability. We’ve already invested in some and will continue to look for incredible teams across these areas.

AMESA’s proving-ground methodology trains agents in controlled simulations before they touch real systems. It’s the difference between a thousand bots loose on a public forum and a coordinated team that has practiced until it can, for example, outperform an industrial controller.

The exposed API keys and prompt injection vulnerabilities plaguing OpenClaw and other AI systems point to an even more fundamental gap: identity. Smallstep has built the infrastructure to authenticate AI agents and MCP servers with cryptographic certificates instead of static secrets. When every agent on Moltbook is one leaked API key away from being hijacked, the case for hardware-bound, short-lived credentials becomes obvious.
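To see why short-lived credentials limit the damage of a leak, here is a deliberately simplified sketch. It is not Smallstep’s API and not a certificate: it uses an HMAC-signed token with an expiry time as a stand-in, and `SIGNING_KEY`, `issue_credential`, and `verify_credential` are hypothetical names. A real deployment would use asymmetric keys, ideally hardware-bound, so that verifiers never hold the signing secret.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical issuer secret. A real CA signs with a private key instead.
SIGNING_KEY = b"demo-issuer-key"


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_credential(agent_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    # Bind the agent identity to an absolute expiry time, then sign both.
    now = time.time() if now is None else now
    payload = f"{agent_id}|{int(now) + ttl_seconds}"
    return f"{payload}|{_sign(payload)}"


def verify_credential(token: str, now: Optional[float] = None) -> Optional[str]:
    # Returns the agent id if the token is authentic and unexpired, else None.
    now = time.time() if now is None else now
    try:
        agent_id, expires, sig = token.rsplit("|", 2)
    except ValueError:
        return None  # malformed token
    if not hmac.compare_digest(sig, _sign(f"{agent_id}|{expires}")):
        return None  # tampered or forged
    if now >= int(expires):
        return None  # expired: a leaked token stops working on its own
    return agent_id


leaked = issue_credential("agent-42", ttl_seconds=300, now=1_000)
print(verify_credential(leaked, now=1_100))  # still inside its 5-minute window
print(verify_credential(leaked, now=1_400))  # past expiry, rejected
```

A leaked static API key keeps working until someone notices and revokes it; a leaked token in this sketch expires by itself once its TTL passes, which shrinks the window an attacker has to exploit it.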

AI Squared tackles the same integration problem that OpenClaw solves for consumers (getting AI out of the lab and into actual workflows), but for enterprises, with the governance and feedback loops that production systems require. As large enterprises race to deploy AI, enablement technologies are essential. AI Squared and Knox, which serves as the accreditation and security layer for AI-native software in the public sector, are exactly the kind of enablement technologies that keep the chaos in check.

What are you building?

Is OpenClaw the singularity? No. Is Moltbook proof that AI agents are truly autonomous? Probably not. But they’re both signals of where things are headed.

The hype will fade. The architecture patterns will stick around, validating that the real opportunity in AI isn’t just about building better models. It’s about building better systems.

If you’re building something in AI that has taken a different approach (new modalities, different architectures, creative integrations), we’d love to hear from you. Shoot me a note: andrew [at] ridgeline.vc.